Flask BIG and small

Flask is nice, but it’s good only for small applications. For anything bigger you should use Django.

– That Guy, at literally any Flask discussion.

I’ve been using Flask for a few months now, and coming from a background of “enterprise” development with the .NET framework, it has really been a breath of fresh air for me. When you use Flask (or Python in general, really), you tend to take a more pragmatic approach to problem solving, for reasons still unknown to me.

Though Flask is often seen as a toy fit only for small applications, I’ve found that with proper use of blueprints you can build web applications of arbitrary complexity with ease. How to do so, and how to get a head start with cookiecutter-flask-mpa, are the topics of this blog post.

Objectives and Success Criteria

Any design must start with clear objectives. Ours are:

- Testing: If it cannot be tested, it’s broken. We care about being able to test our application in an outside-in TDD (double loop) way. More often than not, testing things with the Flask test client is enough, so the default design doesn’t consider E2E/functional testing during development.

- Separation of view and application logic: view logic is about routes, HTTP request/responses, form validation, CSRF protection, and so on. Application logic on the other hand is about transactional scopes, workflow orchestration, and the domain model. In practice, this distinction eases testing and would even allow us to operate our application from the REPL. Details about this later.

- Integration with modern frontend tooling: Like it or not, Webpack and friends are here to stay. Flask serves anything in the static directory, which we want to treat as the output directory. If we’re developing, then it should contain source maps and unminified resources. If we’re deploying to production, then it should contain minified assets.

- Usable for small and BIG projects: …without changing the project structure too much.

Why not Django?

Put bluntly, Django is a VERY opinionated framework, and sometimes, you just… don’t agree with those opinions.

I personally don’t like the choice of ORM (Django ORM vs. SQLAlchemy) and templating engine (Django Templates vs. Jinja2), and while they can be changed, doing so is not recommended and may affect whether Django Admin works or not. Django Admin is one of the selling points of Django, so without it what’s the point?

Finally, Django is BIG. There are a lot of things to learn; the best-practices book for Django 1.11, “Two Scoops of Django”, runs past 500 pages on its own, and while this says more about me than about the framework, I believe it’s just not worth it.

All that effort learning tools that in practice cannot be used outside of Django, all the behind-the-scenes magic and conventions, the Django plugin ecosystem, and what do you get? You get to use a slow WSGI framework. And if I’m going to use a slow WSGI framework, then at least let it be something simpler. Therefore, Flask. QED.

Project Structure and Implementation Details

In the introduction, we said that the key for BIG Flask applications is the use of blueprints. Quite honestly, this whole post could’ve been summarized as “Q: how 2 flask?? A: blueprint!” and it would be technically correct. But there’s more to it than that.

Application factory

We use an application factory that can optionally receive a custom configuration mapping, e.g. for tests. Anywhere you need access to the Flask app (say, to load some configuration value) but don’t have access to current_app, you can just import create_app to get a new Flask app instance.

A real example taken from DNShare: __init__.py. It may not be beautiful, but it shows the create_app function, which takes an optional config_override parameter. A self-contained example of the use of config_override is here.

A minimal, complete example:

from flask import Flask

from .blueprints.default import default


def create_app(config_override=None):
    app = Flask(__name__, instance_relative_config=True)

    if config_override is None:
        # Load environment specific settings from instance/settings.py
        app.config.from_pyfile('settings.py', silent=True)
    else:
        # Tests pass a plain mapping with the settings they need
        app.config.from_mapping(config_override)

    app.register_blueprint(default)

    return app

Configuration loading

There are two main ways of loading environment specific settings:

- From a settings.py file placed in the instance directory.

- From environment variables, as per The Twelve Factors

All other things being equal, you should prefer the second option. Details follow.

Loading config from settings.py

It may actually be a .ini file, or be called config.py, or whatever. The point is that the config is stored in a file in the instance directory, which isn’t version controlled. During development you have a settings.py with development-specific settings, and during deployment the actual settings.py file is somehow created in the instance directory on the production server.

A real example, again from DNShare, but with fake data:

SECRET_KEY = 'dev'
SQLALCHEMY_DATABASE_URI = 'postgresql://dnshare:devdevdev@postgres:5432/dnshare'
SQLALCHEMY_TRACK_MODIFICATIONS = True
DAILY_REGISTRATION_LIMIT = 10
NUM_PROXIES = 0
MAIL_SERVER = 'smtp.example.com'
MAIL_PORT = 465
MAIL_USE_TLS = False  # STARTTLS extension
MAIL_USE_SSL = True
MAIL_USERNAME = 'cristian@example.com'
MAIL_PASSWORD = 'hunter2'
MAIL_DEFAULT_SENDER = 'DNShare.org <cristian@dnshare.org>'

Is there any advantage to using settings.py over environment variables?

Yes. settings.py is just another Python file and can contain arbitrary Python constructs. Environment variables are all strings, so you’ll need to parse them before being able to use them.

Loading config from environment variables

You can get the values of environment variables by calling os.environ.get(name) or os.environ[name]. Then, as said, you need to parse each string into whatever type your application requires.

To do so, you could add Flask-Env to your production dependencies, or do as I did while writing this section:

import os


def parse_bool(text):
    return text.lower() == "true"


def get_env(name, parser=None):
    raw_value = os.environ.get(name, default=None)
    # Missing and empty variables are both treated as errors
    if not raw_value:
        raise KeyError(f'Unable to load "{name}" from environment.')
    return parser(raw_value) if parser else raw_value


SECRET_KEY = get_env('SECRET_KEY')
SQLALCHEMY_DATABASE_URI = get_env('SQLALCHEMY_DATABASE_URI')
SQLALCHEMY_TRACK_MODIFICATIONS = get_env('SQLALCHEMY_TRACK_MODIFICATIONS',
                                         parse_bool)
DAILY_REGISTRATION_LIMIT = get_env('DAILY_REGISTRATION_LIMIT', int)
NUM_PROXIES = get_env('NUM_PROXIES', int)
MAIL_SERVER = get_env('MAIL_SERVER')
MAIL_PORT = get_env('MAIL_PORT', int)
MAIL_USE_TLS = get_env('MAIL_USE_TLS', parse_bool)  # STARTTLS extension
MAIL_USE_SSL = get_env('MAIL_USE_SSL', parse_bool)
MAIL_USERNAME = get_env('MAIL_USERNAME')
MAIL_PASSWORD = get_env('MAIL_PASSWORD')
MAIL_DEFAULT_SENDER = get_env('MAIL_DEFAULT_SENDER')

It’s equivalent to the previous settings.py, but this time it’s placed as a sibling of the package’s __init__.py file, so it can be imported as dnshare.settings. An additional, minor change was required in __init__.py too:

    # ...
    if config_override is None:
        app.config.from_object('dnshare.settings')
    else:
        # ...

And that’s it. If you don’t use the instance directory for anything else, you may even delete it, and remove the volume setup from your Dockerfile if you were using Docker.
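The get_env helper above treats an empty value as missing and has no way to express an optional setting, and its parse_bool silently reads a typo such as "ture" as False. A minimal sketch of a stricter variant follows, assuming you want an optional default, a parse_bool that fails loudly on unexpected input, and an injectable environ mapping so tests don’t have to touch the real environment. The _MISSING sentinel, the accepted boolean spellings and the environ parameter are illustrative choices, not part of Flask or Flask-Env.

```python
import os

# Sentinel so that None can still be passed as a legitimate default value.
_MISSING = object()

# Illustrative spellings; adjust to whatever your deployment tooling emits.
_TRUE_VALUES = {'1', 'true', 'yes', 'on'}
_FALSE_VALUES = {'0', 'false', 'no', 'off'}


def parse_bool(text):
    """Parse a boolean setting, raising on anything unexpected."""
    value = text.strip().lower()
    if value in _TRUE_VALUES:
        return True
    if value in _FALSE_VALUES:
        return False
    raise ValueError(f'Cannot interpret {text!r} as a boolean.')


def get_env(name, parser=None, default=_MISSING, environ=None):
    """Read `name` from the environment and optionally parse it.

    Missing or empty variables fall back to `default` when one is given,
    otherwise a KeyError is raised. `environ` defaults to os.environ.
    """
    environ = os.environ if environ is None else environ
    raw_value = environ.get(name)
    if not raw_value:
        if default is _MISSING:
            raise KeyError(f'Unable to load "{name}" from environment.')
        return default
    return parser(raw_value) if parser else raw_value
```

Defaults are returned as-is rather than passed through the parser, so give them their final type, e.g. get_env('NUM_PROXIES', int, default=0).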

Blueprints

Q: how 2 flask?? A: blueprint!

– Me, literally a few paragraphs ago

While technically we could plaster all views in the create_app function of our application, that would become very unmanageable, very quickly. Our applications, if they’re going to be maintained at all, must use Blueprints.

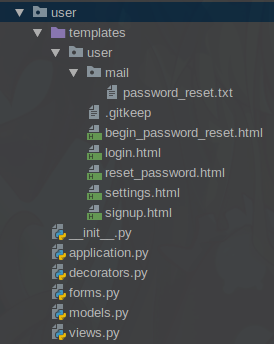

For the most part, there are four modules that are usually found inside a blueprint, and a templates directory:

- views.py

- forms.py

- models.py

- application.py

It’s often a good start to think in terms of those. More modules can be added as needed.

views.py - View Functions

Simplified example taken from DNShare - user blueprint:

from flask import Blueprint, flash, redirect, render_template, url_for

from .forms import BeginPasswordResetForm
from .application import send_password_reset_email

user = Blueprint('user', __name__, template_folder='templates')


@user.route('/forgot-password', methods=['GET', 'POST'])
def begin_password_reset():
    form = BeginPasswordResetForm()

    if form.validate_on_submit():
        email = form.email.data
        # The actual work is delegated to the application layer
        send_password_reset_email(email)
        flash('An email has been sent to {0}.'.format(email), 'success')
        return redirect(url_for('user.login'))

    return render_template('user/begin_password_reset.html', form=form)

At a very high level, a view’s two main tasks are to process requests and to send responses. Request validation is often done by the forms.py module, and the actual work is performed by the application.py module.

The previous example doesn’t consider possible failures when executing send_password_reset_email, so if an exception is raised, the user would see the Internal Server Error page. Often, we configure our application to log any uncaught exception, so letting unexpected exceptions explode in our users’ faces may be just what we want. I would advise against that in serious production applications, though.

But, what about expected failure cases that we can’t just check in the forms.py module? For example, failures that can only happen when we are executing the action, for which there’s no way of knowing beforehand if they will occur or not?

Take a look at the following snippet taken from here:

# [...]
try:
    is_anon = current_user.is_anonymous
    register_subdomain(subdomain, domain, host_ip, creator_ip,
                       None if is_anon else current_user)
except SubdomainAlreadyTaken:
    taken_msg = _create_already_taken_msg(subdomain, domain)
    flash(taken_msg, 'error')
    return redirect(url_for('default.home'))
except RegistrationLimitSurpassed:
    flash(TOO_MANY_REGISTRATIONS_MSG, 'error')
    return redirect(url_for('default.home'))
except InvalidIPv4HostAddress:
    host_error = _create_invalid_host_msg(host_ip)
    flash(host_error, 'error')
    return redirect(url_for('default.home'))
except InvalidSubdomainError:
    error = _create_invalid_subdomain_msg(subdomain)
    flash(error, 'error')
    return redirect(url_for('default.home'))
# [...] redirect to success page

That’s how. Before you WTF?! at me, please wait a little: we’ll cover the details of the WHY and HOW of the application layer in a following section. It was not a random choice, it wasn’t even a choice made while programming in Python, but in .NET.

forms.py - Request validation

Use Flask-WTF for form validation. It adds some nice features over vanilla WTForms, such as CSRF protection. You may find WTForms-Components and WTForms-Alchemy to be useful too.

Ideally, you would move as much validation as possible inside form validators. Views can get really lean if the only thing you need to do before proceeding with the operation is to call form.validate() and nothing more.

The only notable thing is that if we want to use both WTForms-Components and Flask-WTF’s CSRF protection together, then we either have to install WTForms-Alchemy and use its ModelForm, or just subclass from the following creature:

from flask_wtf import FlaskForm


class ModelForm(FlaskForm):
    """
    wtforms_components exposes ModelForm but their ModelForm does not inherit
    from flask_wtf's Form, but instead WTForm's Form.

    However, in order to get CSRF protection handled by default we need to
    inherit from flask_wtf's Form. So let's just copy his class directly.

    We modified it by removing the formdata argument so that flask_wtf
    uses its own default which is to pass in request.form automatically.
    """

    # noinspection PyCallByClass
    def __init__(self, obj=None, prefix='', **kwargs):
        FlaskForm.__init__(
            self, obj=obj, prefix=prefix, **kwargs
        )
        self._obj = obj

For more details about Flask-WTF go read the manual.

models.py - SQLAlchemy models

Here you have a choice. Save your game and choose.

+--------------------------+
|  Use vanilla SQLAlchemy  |
+--------------------------+

+--------------------------+
|   Use Flask-SQLAlchemy   |
+--------------------------+

Just as in those games, you’ll end up revisiting the choice later anyway. Each option has its own advantages, and they’re, for the most part, mutually exclusive. Flask-SQLAlchemy is the convenient choice, but vanilla SQLAlchemy is required for a strict separation of view and application concerns.

Why? Go read the Flask-SQLAlchemy quickstart. I’ll wait.

If you’re attentive enough, you may have noticed that to use Flask-SQLAlchemy you only need to set a Flask app config key, and that’s it. You don’t need to go around creating the SQLAlchemy engine or the sessions yourself; you just use them. They’re there for you, as long as you’re operating inside a Flask application context, which Flask pushes for you during every request (Introduction into Contexts).

In other words, your application layer is tied to your choice of Flask as web framework. That means you can’t just change web frameworks, you can’t use your application from the REPL without with app.app_context() blocks everywhere, and when doing integration testing against the DB (but not the Flask views) you have to remember to follow the instructions listed in Introduction into Contexts. Whether this is a problem or not is up to you. Python is all about trade-offs, after all.

If you decide to go with vanilla SQLAlchemy, then take care not to carelessly import flask.current_app just to get at configuration values. If you do, you’re EVEN WORSE off than if you had just gone with Flask-SQLAlchemy. The most straightforward alternative is to group your application layer functions into classes, and inject configuration through their constructors.
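To make that suggestion concrete, here is a minimal sketch of an application service that receives its configuration through its constructor instead of reading flask.current_app. DomainRegistrationService and FakeGateway are hypothetical names for illustration; FakeGateway only imitates the create_a_record call that DNShare’s DigitalOcean gateway exposes in the register_subdomain example later in this post.

```python
class DomainRegistrationService:
    """Hypothetical application service with injected configuration."""

    def __init__(self, config, gateway_factory):
        # Read what we need once; no Flask import anywhere in this class.
        self._api_key = config['DIGITALOCEAN_API_KEY']
        self._gateway_factory = gateway_factory

    def create_record(self, domain, subdomain, host_ip):
        gateway = self._gateway_factory(self._api_key)
        return gateway.create_a_record(domain, subdomain, host_ip)


class FakeGateway:
    """Test double standing in for a real DNS provider gateway."""

    def __init__(self, api_key):
        self.api_key = api_key
        self.created = []

    def create_a_record(self, domain, subdomain, host_ip):
        self.created.append((domain, subdomain, host_ip))
        return {'id': len(self.created)}
```

In create_app you could build the service once, e.g. DomainRegistrationService(app.config, DigitalOceanGateway), since Flask’s Config is a dict subclass; tests and the REPL just pass a plain dict and a fake.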

How much logic goes into the models? How much into the application layer?

I don’t have a one-size-fits-all answer to that, but a nice heuristic: if it contains a lot of workflow logic, then put it in the application layer. If it’s only relevant to a single model, then put it into the model. If it’s both, then use your brain. Or not; you can refactor later anyways.

What about transaction commits?

Those are managed by the application layer; don’t put them into the models. The bad case is that you commit a running transaction inside a model, and inadvertently commit data that was not ready to be committed. The WORST case is that the rest of the operation fails after the commit, and now you’ve corrupted your data. CONGRATULATIONS!
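The same rule can be shown with the standard library’s sqlite3 module standing in for SQLAlchemy (a deliberate swap, to keep the sketch self-contained): the model-level helper only issues statements, while the application-level function decides when to commit and rolls back on any failure. It mirrors the try/commit/except/rollback/raise shape of register_subdomain later in this post; the table and function names are hypothetical.

```python
import sqlite3


def create_schema(conn):
    conn.execute('CREATE TABLE registrations (name TEXT PRIMARY KEY)')
    conn.commit()


def add_registration(conn, name):
    """Model-level helper: writes, but never commits."""
    conn.execute('INSERT INTO registrations (name) VALUES (?)', (name,))


def register_pair(conn, first, second, fail_midway=False):
    """Application-level operation: both rows are committed, or neither is."""
    try:
        add_registration(conn, first)
        if fail_midway:
            # Simulates, e.g., an external gateway call failing mid-operation.
            raise RuntimeError('simulated gateway failure')
        add_registration(conn, second)
        conn.commit()
    except Exception:
        conn.rollback()
        raise
```

Had add_registration committed on its own, the failed call would have left a half-finished registration behind.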

Anything else?

Consider forcing the use of timezone aware datetimes. Install pytz for dealing with timezones.

import datetime

from sqlalchemy import DateTime
from sqlalchemy.types import TypeDecorator


class TzAwareDateTime(TypeDecorator):
    """
    A DateTime type which can only store tz-aware DateTimes.

    Source:
    https://gist.github.com/inklesspen/90b554c864b99340747e
    """

    impl = DateTime(timezone=True)
    # SQLAlchemy 1.4+ warns unless a TypeDecorator declares whether it is
    # safe to cache; this one holds no per-instance state.
    cache_ok = True

    def process_bind_param(self, value, dialect):
        # Reject naive datetimes before they reach the database
        if isinstance(value, datetime.datetime) and value.tzinfo is None:
            raise ValueError('{!r} must be TZ-aware'.format(value))
        return value

    def __repr__(self):
        return 'TzAwareDateTime()'
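TzAwareDateTime only guards the database boundary; the values still have to be created aware in the first place. Note that datetime.utcnow(), used in register_subdomain below, returns a naive datetime (and is deprecated since Python 3.12), so a column of this type would reject it. A minimal standard-library sketch, assuming UTC is the only zone you need (pytz or zoneinfo become relevant for other zones); utc_now and ensure_tz_aware are hypothetical helpers, the latter repeating the check process_bind_param performs.

```python
from datetime import datetime, timedelta, timezone


def utc_now():
    """Return the current time as a timezone-aware UTC datetime."""
    return datetime.now(timezone.utc)


def ensure_tz_aware(value):
    """Same check as TzAwareDateTime.process_bind_param, without SQLAlchemy."""
    if isinstance(value, datetime) and value.tzinfo is None:
        raise ValueError('{!r} must be TZ-aware'.format(value))
    return value


# Aware arithmetic stays aware, e.g. a 7-day expiration date.
expiration_date_utc = ensure_tz_aware(utc_now() + timedelta(days=7))
```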

application.py - Application Layer

Application Layer: Defines the jobs the software is supposed to do and directs the expressive domain objects to work out problems. The tasks this layer is responsible for are meaningful to the business or necessary for interaction with the application layers of other systems.

– Eric Evans, Domain-Driven Design (2004), p.70

Both Evans, and later, Vernon, describe the application layer responsibility as one of “coordination”. The layer makes use of domain objects, domain services, and external services (usually via gateways) to accomplish some business meaningful task. In practice, it’s also responsible for managing transaction lifetimes, as it knows when a business task begins, and when it ends… but we’ll stop here, as DDD is a FUCKING HUGE topic and this post is long enough as it is.

The thing is that, after working in .NET development for a while, I’ve learned that having a separate application layer, even in non-DDD projects, is worth the minor effort required to set one up: the clarity that comes from not intermingling HTTP, Flask, and i18n concerns with what our application is supposed to solve, and the ease of testing that follows from it, are well worth it.

An application function can be as simple as this (source):

def send_password_reset_email(email):
    """
    Send a password reset link to the given email, but only if the email
    is associated with an user.

    :param email: User e-mail address
    :type email: str
    """
    user = User.query.filter_by(email=email).first()
    if user is None:
        return

    reset_token = user.serialize_token()
    ctx = {'user': user, 'reset_token': reset_token}
    send_template_message(subject='DNShare.org account password reset',
                          recipients=[user.email],
                          template='user/mail/password_reset', ctx=ctx)

Or more complex like in this other example (source):

def register_subdomain(subdomain, domain, host_ip, owner_ip, owner_acc=None):
    """Register a subdomain for an anonymous user.

    Args:
        subdomain (str): The subdomain to register.
        domain (str): The domain under which the subdomain will be created.
            Currently it can be either 'dnshare.org' or 'dnshare.club'.
        host_ip (str): IPv4 formatted IP address to which the
            subdomain.domain will resolve.
        owner_ip (str): IPv4 formatted IP address that'll be able to make
            further changes to this registration.
        owner_acc (User): The currently logged user, if any.

    Returns:
        OperationResult if the registration is successful, with result
        being 'ok' and data being None.
        If there was a still active registration which was overriden,
        result will be 'reregistration' and 'data' will correspond
        to the previously active host_ip.

    Raises:
        dnshare.blueprints.default.application.SubdomainAlreadyTaken: If the
            combination of subdomain and domain is already taken and currently
            active, and its owner is different from owner_ip.
        dnshare.gateways.InvalidSubdomainError: If the subdomain isn't valid.
        dnshare.gateways.DomainNotManagedError: If the domain isn't managed by
            DNShare.
        dnshare.gateways.InvalidIPv4HostAddress: If host_ip isn't a valid IPv4
            IP address.
    """
    try:
        existing_dr = DomainRegistration.query \
            .filter_by(subdomain=subdomain, domain=domain, is_active=True) \
            .one_or_none()

        # If there's an active registration from someone else, STOP.
        if existing_dr:
            dr_owner = existing_dr.user
            if dr_owner and (not owner_acc or dr_owner.id != owner_acc.id):
                raise SubdomainAlreadyTaken(f'{subdomain}.{domain}')
            elif existing_dr.creator_ip != owner_ip:
                raise SubdomainAlreadyTaken(f'{subdomain}.{domain}')

        if existing_dr is None:
            _raise_error_if_daily_registration_limit_surpassed(owner_ip)

        utc_now = datetime.utcnow()
        expiration_date_utc = utc_now + timedelta(days=7)
        registration = DomainRegistration(
            subdomain=subdomain,
            domain=domain,
            host_ip=host_ip,
            creator_ip=owner_ip,
            creation_date_utc=utc_now,
            expiration_date_utc=expiration_date_utc,
            is_active=True,
            user=owner_acc)
        db.session.add(registration)

        api_key = _config["DIGITALOCEAN_API_KEY"]
        gateway = DigitalOceanGateway(api_key)
        new_record = gateway.create_a_record(domain, subdomain, host_ip)
        registration.id = new_record["id"]

        if existing_dr is not None:
            _invalidate_registration(existing_dr)

        db.session.commit()
    except Exception:
        db.session.rollback()
        raise

Here we see that the function is responsible for the transaction lifetime. It also reminds me that I’m due for an explanation regarding raising exceptions to signal expected failures.

Exceptions as signals of failure

The application layer must be able to signal success and failure statuses to its client. Success can be easily communicated by letting the method finish normally; the issue is how to signal failure. There are at least three options for doing so:

- Return an error code.

- Represent possible results as enum classes, or as an ad-hoc class hierarchy, depending on your language’s capabilities.

- Raise/Throw exceptions.

Error codes are acceptable in C, and enums aren’t that bad either, except that nothing stops you from writing a client that handles only the success case and nothing else. Then you risk showing the user a success page when the operation wasn’t actually a success. That leaves us with raising exceptions, which forces the client developer (often ourselves) not to be lazy.

I repeat: If you return error codes, or even create a hierarchy of success and error responses, then you could easily ignore those error responses and show the user a success page despite the operation failing.

Given that in Python it’s already common to use exceptions to signal failures, even when they aren’t exceptional, using them for normal application flow isn’t that heretical, really.

Example application layer exception (source):

class SubdomainAlreadyTaken(Exception):
    def __init__(self, message):
        super().__init__(message)

Raising events in Python

It may happen that you need to inform the caller about something as it happens. Python doesn’t have a built-in concept of “events”, so the common answer is to install a third-party library, blinker. I won’t cover the usage of that library, but examples of its use can be seen in two places in DNShare:

- Before registering a subdomain, a handler for when an old registration is overridden is “connected”. Source

- If a subdomain registration happens, then a signal is “sent”. Source
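For readers who haven’t used blinker, the underlying idea is the observer pattern. The following is a minimal sketch of that pattern, not blinker itself: the connect/send naming loosely follows blinker, but blinker adds named signals, per-sender filtering and weak references to receivers by default, none of which this toy Signal class does. The signal name and receiver below are hypothetical.

```python
class Signal:
    """Toy observer: receivers are plain callables taking (sender, **kwargs)."""

    def __init__(self, name):
        self.name = name
        self._receivers = []  # strong references, unlike blinker's default

    def connect(self, receiver):
        """Register a receiver; returning it lets connect work as a decorator."""
        if receiver not in self._receivers:
            self._receivers.append(receiver)
        return receiver

    def disconnect(self, receiver):
        self._receivers.remove(receiver)

    def send(self, sender, **kwargs):
        """Call receivers in connection order; collect (receiver, result) pairs."""
        return [(receiver, receiver(sender, **kwargs))
                for receiver in list(self._receivers)]


# Hypothetical usage, echoing the "old registration overridden" case.
registration_overridden = Signal('registration-overridden')
overridden_ips = []


@registration_overridden.connect
def remember_previous_host(sender, previous_host_ip):
    overridden_ips.append(previous_host_ip)
    return 'noted'


results = registration_overridden.send('register_subdomain',
                                       previous_host_ip='198.51.100.1')
```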

Configuration settings

As said in the models.py module section, this is somewhat of a project-wide decision. If you’re A-OK with intermingling Flask with your non-Flask-specific code, then go ahead and import flask.current_app to get access to the Flask app config.

I’m in the camp that believes it to be an acceptable tradeoff, as Dependency Injection is often required in languages like Java and C# for testing and runtime polymorphism. In Python, with monkeypatching and mocks you can test pretty much anything, and Python’s duck typing is how Python does polymorphism.
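A minimal sketch of the monkeypatching approach, using the standard library’s unittest.mock.patch.dict. The module-level CONFIG dict stands in for current_app.config and registration_allowed is a hypothetical application function; pytest-mock’s mocker fixture exposes the same helper as mocker.patch.dict.

```python
from unittest import mock

# Hypothetical module-level configuration, standing in for current_app.config.
CONFIG = {'DAILY_REGISTRATION_LIMIT': 10}


def registration_allowed(registrations_today):
    """Application function that reads configuration directly, without DI."""
    return registrations_today < CONFIG['DAILY_REGISTRATION_LIMIT']


def test_limit_can_be_overridden():
    # patch.dict swaps values in for the duration of the block and
    # restores the original contents afterwards.
    with mock.patch.dict(CONFIG, {'DAILY_REGISTRATION_LIMIT': 1}):
        assert registration_allowed(0)
        assert not registration_allowed(1)
    assert registration_allowed(5)
```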

Templates per blueprint

This is optional, and a matter of preference, mostly.

By default, our templates are placed at the templates directory located at the root of our Flask app package. We can create directories inside it for each of our blueprints if we wish, but if you wish to keep your templates inside your blueprint packages, then two things are needed.

First, your Blueprint instance must be initialized with an explicit template_folder keyword argument:

user = Blueprint('user', __name__, template_folder='templates')

Second, create the templates directory as a sibling of wherever you initialized your Blueprint instance. It’s often a good idea to create another directory inside this templates directory to prevent template name collisions, as the lookup for templates will happen in your blueprint specific directory and in the root directory too.

Python third party dependencies

This will be covered in a later post, but basically we have a hierarchy of requirements files for all the different environments we may need (often just dev and prod), and a lockfile.

For now, refer to https://gitlab.com/cristianof/dnshare/tree/master/requirements to see how it works.

Install new dependencies:

# We're assuming you've activated the project's virtualenv
echo mydepname >> requirements/prod.txt  # or dev.txt, if you want a dev dependency
pip install -r requirements/prod.txt
pip freeze > requirements/lock.txt

Tests

Use pytest, pytest-mock, and optionally pytest-watch. Just make the tests directory a Python package and you can import anything else from the project, if you run your tests from the root of the project. No need for weird PYTHONPATH black magicks, and unless you’re planning to deploy your webapp in several different environments, Tox testing is unnecessary.

Fast tests, and slow tests

Separate your unit from your integration tests.

Inside your tests package, create an integration package, and a unit package. In the root of your project then add the pytest.integration.ini and pytest.unit.ini files. Optionally create a pytest.ini file for running all the tests.

# pytest.ini
[pytest]
testpaths = tests/

# pytest.integration.ini
[pytest]
testpaths = tests/integration/

# pytest.unit.ini
[pytest]
testpaths = tests/unit/

Running tests

Tests will be run using make. We’ll build a Makefile in a later section.

Frontend assets

We don’t modify Flask’s static directory ourselves. It’s just the output directory for whatever we do with Webpack. Take a look at the following:

- package.json: it also includes bulma, which you can remove if you don’t like CSS frameworks.

- webpack.config.js: it allows for SCSS entrypoints, and anything you place inside the assets directory that’s not inside the assets/scss directory gets copied as-is to the output directory.

By changing the config.output.path setting in webpack.config.js it can be used in other projects.

Finally, use yarn, not npm. Yarn is faster, it doesn’t assume that every project is a NodeJS package (no bloated package.json), and I believe it’s more secure too.

Storing environment variables in .env

As we use environment variables instead of a settings.py file, we need to store those environment variables in some place that’s NOT IN VERSION CONTROL. A common practice is to create a .env file and place key=value pairs in there.

FLASK_APP=dnshare
FLASK_ENV=development
POSTGRES_USER=example
POSTGRES_PASSWORD=hunter2
[...]
MAIL_DEFAULT_SENDER="DNShare.org <example@example.com>"

How to load those values into the environment depends on your current shell.
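If you’d rather not depend on your shell, the file can also be loaded from Python. Real tools already do this (docker-compose’s env_file option, and python-dotenv, which the flask command uses to load .env automatically when it is installed), but a minimal standard-library sketch of the idea looks like this. It assumes simple KEY=value lines: it skips blank lines, comments and lines without “=”, strips one pair of surrounding quotes, and does not handle export prefixes, variable expansion or multi-line values. parse_dotenv and load_dotenv_file are hypothetical names.

```python
import os


def parse_dotenv(text):
    """Parse the contents of a .env file into a dict of strings."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith('#'):
            continue
        key, sep, value = line.partition('=')
        if not sep:
            continue  # not a KEY=value line, e.g. a "[...]" placeholder
        key, value = key.strip(), value.strip()
        # Strip one pair of matching surrounding quotes.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in '"\'':
            value = value[1:-1]
        values[key] = value
    return values


def load_dotenv_file(path='.env', override=False):
    """Copy parsed values into os.environ; existing variables win by default."""
    with open(path, encoding='utf-8') as env_file:
        values = parse_dotenv(env_file.read())
    for key, value in values.items():
        if override or key not in os.environ:
            os.environ[key] = value
    return values
```

Letting already-set variables win by default means a value exported in the shell or by Docker takes precedence over the file.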

Docker and docker-compose

I prefer Docker for packaging and deployment of my Flask applications. Often something like the following (source) is enough for that:

FROM python:3.6.5-slim

ENV INSTALL_PATH /app
RUN mkdir -p ${INSTALL_PATH}
VOLUME ${INSTALL_PATH}/instance
WORKDIR ${INSTALL_PATH}

COPY . .
RUN pip install -r requirements/prod.txt

CMD gunicorn -b 0.0.0.0:8966 --access-logfile - --error-logfile - "dnshare:create_app()"

Docker Compose is another nifty tool for development: you can easily set up services like PostgreSQL and then dispose of them when you no longer need them.

Yet another example taken from DNShare (source):

version: '2'

services:
  gunicorn:
    build: .
    volumes:
      - './instance/:/app/instance/'
    ports:
      - '8000:8966'
    env_file: '.env'

  postgres:
    image: 'postgres:10'
    env_file: '.env'
    volumes:
      - 'postgres:/var/lib/postgresql/data'
    ports:
      - '5432:5432'

volumes:
  postgres:

Make & Makefile

We use make for two important reasons:

- Building and pushing (to some Docker image registry) Docker images.

- Running tests from the command line.

In some projects we also use it for deployments to production.

Building and pushing Docker images

NAME := ${DOCKER_USER}/dnshare
TAG := $$(git log -1 --pretty=%h)
IMG := ${NAME}:${TAG}
LATEST := ${NAME}:latest

build:
	@yarn build
	@docker image build -t ${IMG} -t ${LATEST} .

push:
	@docker push ${IMG}
	@docker push ${LATEST}

login:
	@echo "${DOCKER_PASS}" | docker login -u ${DOCKER_USER} --password-stdin

With this, a new Docker image is built tagged with the short hash of the latest commit in the current Git branch upon running make build. make push would then try to push that image to Docker Hub, but it would fail unless we ran make login at some earlier point.

Basically, make login && make build push would sign us in, build the Docker image and push it to Docker Hub with just one command. Isn’t that great?

Running tests

ptw:
	.venv/bin/ptw --nobeep --clear --spool 100 --runner ".venv/bin/pytest -v -c pytest.unit.ini"

test:
	.venv/bin/pytest -v

make ptw runs pytest-watch, which reruns the unit tests (as configured in pytest.unit.ini) whenever a project file changes. Excellent for TDD.

make test runs all the tests.

Simple deployment to single remote host

If we have a single remote host, and we don’t require any kind of CI/CD setup (maybe it’s a hobby project) then we can deploy to production by recreating the Docker container based on the latest image in the remote host. Example follows.

First, production docker-compose script

Filename: dnshare.docker-compose.yml

version: '2'

services:
  gunicorn:
    image: sinenie/dnshare
    volumes:
      - '/home/max/dnshare/instance/:/app/instance/'
    env_file: 'environment'
    network_mode: 'host'

Next, deployment script

#!/bin/sh
# This is an example deployment script apt for personal projects deployed on a single
# remote host. It's most likely not appropriate for production deployments.

cd dnshare
docker image pull sinenie/dnshare:latest
docker-compose -f dnshare.docker-compose.yml down
docker-compose -f dnshare.docker-compose.yml up -d

Finally, make target

Add to Makefile.

SERVER := ${SSH_USER}@${SSH_HOST}

deploy:
	@scp dnshare.docker-compose.yml ${SERVER}:dnshare/
	@cat deploy.sh | ssh ${SERVER}

Then deployment is just a matter of running: make login && make build push && make deploy and waiting.

Quickstart with cookiecutter-flask-mpa

As setting up all of this by hand is a chore, it’s highly advisable to install cookiecutter and just run cookiecutter gl:cristianof/cookiecutter-flask-mpa. This will use cookiecutter-flask-mpa to set up the directory structure for you.

Forking the cookiecutter for setting your own defaults and making all the changes you need to make it your own may be a good idea.

Closing notes

Is all of this the true answer? No, I don’t think that I’m 100% right on all points. Except about tagging Docker images based on the latest Git hash. That’s objectively a good idea.